I joined my previous employer, ThoughtWorks, roughly 6 years ago. It has been a fantastic journey. I can certainly say that the vibrant (and a bit chaotic) environment helped me become a better version of myself, again and again. I got an incredible amount of feedback from many different people with vast experience in many different fields of “technology”. This was a key enabler for me over the past years.

I joined ThoughtWorks to improve my “QA Engineering” skills, especially in End-To-End Test Automation. And uhh… how much did I have to learn! Thanks to Dani, Mario, Nadine and Robert (who were the QAs at ThoughtWorks when I joined), I quickly discovered that the scope in which you can increase the level of quality is massive. So much more than I could have imagined before.

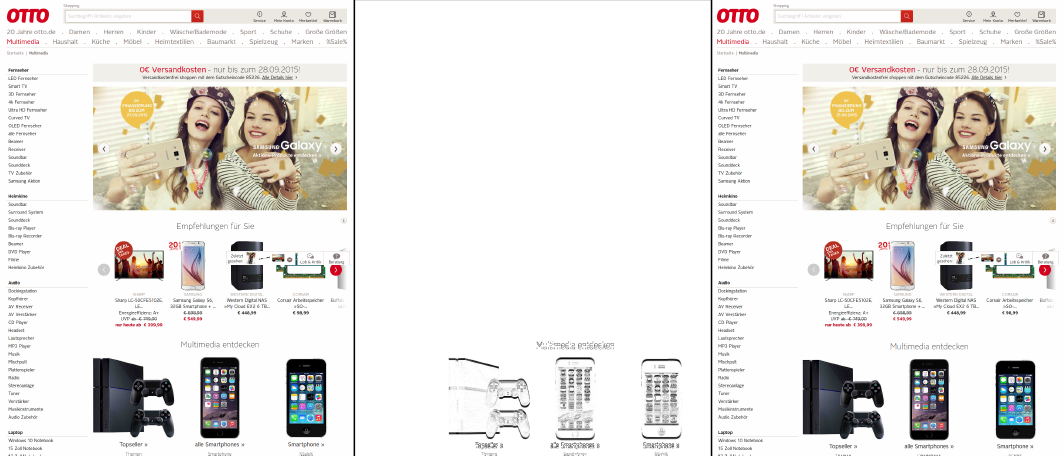

Otto was my first project, and many more followed. I gathered experience in 15 different teams over the course of those 6 years. They were spread across all kinds of tech stacks, domains, companies, projects, locations… One thing was always the same though: all teams had errors in the software. Since starting my career I’ve seen all kinds of Data Storage (SQL, PostgreSQL, MariaDB, CouchDB, MongoDB, Cassandra, Redis, Elasticsearch, Splunk, DynamoDB, S3, …) and worked with all kinds of Programming Languages (Turbo Pascal, Fortran, C++, Ruby/Rails, JavaScript, Swift, Java, Clojure, Kotlin, Scala, TypeScript, …). However, regardless of the technology in use, there were always errors.

And isn’t that odd? Let me repeat that last part: “Regardless of the technology in use …”. That would mean that the technology is not the problem. And since we already make errors when we use technology to solve errors in the real world, let me suggest that we do not rely on technology alone to solve errors in technology. The conclusion, then, is that we should take a step back from “test-automation-everything” and admire the core problem a bit longer. And ultimately, it is easy to spot: The one common denominator, the one factor which contributed to errors in all those systems is us – us Humans.

Whoever worked with me in the past years will have noticed that I started to focus not only on the technological part and some kind of governance ( = “the process” ), but to look equally into team dynamics. And to understand how information is transported through the team. How requirements were very clear to everyone – or, more often, not. Using Conway’s law in reverse, I am trying to build teams whose human interaction is of high quality, so that their services and software display this very same attribute. The only way to build teams in this way is to make “Quality” (whatever “Quality” means in detail for a specific context) an integral part of a team’s culture.

The problem is that it is difficult to map a job with the title “team culture enablement to improve the Quality of Software-Products using Conway’s law in reverse” onto the QA career path of a high performing global cutting-edge technology consultancy 🙂 In the beginning of my employment with ThoughtWorks, it was a great journey as a QA, since the environment allowed me to “shift left” and “shift right” and “deep dive” in my role. All at the same time! I am very grateful for this opportunity that was given to me. I am thankful for the room my colleagues gave me. I hope I could also deliver on their expectations!

However, the further my journey took me, the more I noticed how narrowly a “QA Role” was defined by the clients I worked with. Hence, for every new project I had to emancipate myself from the client’s initial understanding of the role and evolve it together with the client in a positive and constructive way. After some time, even this small cultural transformation becomes repetitive and then tedious.

But it must have been fate: At some point I joined a client organization where there were many interesting and wild thoughts in those directions around “Quality”. It was a Hamburg startup. The name of this company is MOIA.

However, before I dive into the specifics of MOIA, I have to take the unavoidable detour for a bit of context setting: I joined the MOIA project about half a year before the coronavirus hit us. Once we were in the middle of the pandemic, my family reflected on how we wanted to shape our future and whether we saw this future in a world-famous metropolis or – and this is what we ultimately decided on – rather in the countryside. Hence, we shifted gears and started to look for a new home in a small village in the middle of Schleswig-Holstein. The prospect of more home office obviously contributed to painting this picture of our family future. With MOIA having the majority of their tech development in Hamburg, it was a perfect match to shape a new path forward ❤

By the time we concluded our family plans, I had also established deeper connections (I want to say: roots!!) within MOIA. Back in the days of the first Corona wave, I worked with one of the best and most empathetic teams I ever had the pleasure to work with. And all of those fine folks were from MOIA! Besides, the current set of challenges is extremely interesting: a distributed system of many services and apps and web technologies. And a great demand for Quality in the end product. However, in many places the bi-weekly releases still depend on large amounts of manual regression testing. What a shocker! Someone needs to do something about this! And I want to believe that those are areas where I can contribute.

And the best thing is: The company has an amazing culture of trustful and constructive collaboration. New ideas are welcome and (sometimes more, sometimes less) enthusiastically implemented 🙂 Appreciation is given towards each other freely and frequently.

Furthermore, MOIA is a company with a great Vision. MOIA participates in the endeavour to change our cities, to very actively contribute one puzzle piece to the “Verkehrswende” (= transformation of mobility and transportation in Germany). I found a role where I can contribute to the culture of many teams and still have a direct impact on the Quality, the processes around it and the Strategies and Approaches (and also on testing throughout the entire company). This is a huge, difficult and quite complex task.

I am looking forward to truly immersing myself in this task, to really and wholeheartedly falling in Love with the Product that I am helping to build. To continuing to work with the great people that I’ve met over the last two years and to helping shape a future for MOIA and our cities. And to planting some seeds in a company culture. To growing them and catering to their needs for many years – and not months.

I am grateful for my journey up until now, which brought me to this point. My thanks go to all the people who contributed to the experiences that I have and who definitely had their share in making me the person that I am.

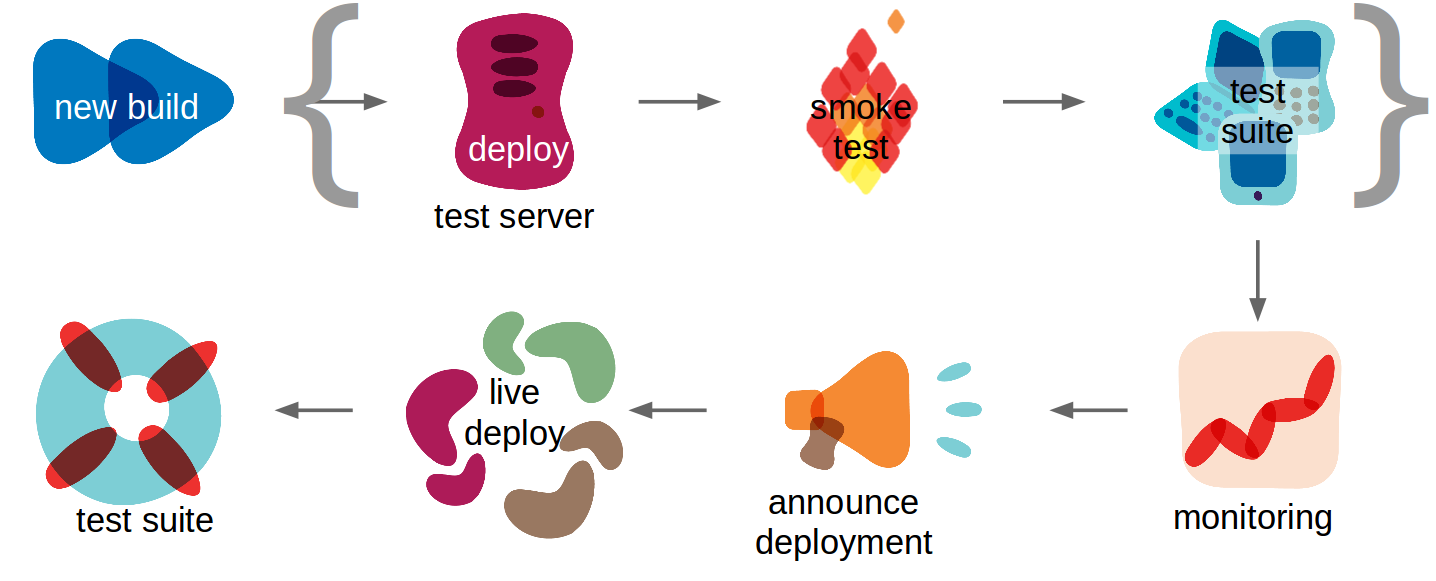

The next step is to deploy a green build to a test server and continue testing the new software. When talking about deployments, one often forgets that it is code that executes all the steps necessary to provision a server with new software. Even this code can fail, and thus we recommend running a small smoke test right after the deployment. This can be as easy as checking the version number on a status page or the git hash in the meta information on the front page. You will save a lot of time by not executing tests against old code.
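A post-deployment smoke test of this kind can be very small. The following is a minimal sketch in Python, assuming a hypothetical status page that returns JSON with a `version` field; the function names, the URL and the JSON layout are illustrative assumptions, not the setup used in the project described here.

```python
import json
from urllib.request import urlopen


def deployed_version(status_body: str) -> str:
    """Extract the version from a JSON status page body (assumed format)."""
    return json.loads(status_body)["version"]


def is_expected_version(status_body: str, expected: str) -> bool:
    """True when the status page reports the build that was just deployed."""
    return deployed_version(status_body) == expected


def smoke_test(status_url: str, expected: str, timeout: float = 5.0) -> bool:
    """Fetch the status page and compare its version with the expected one."""
    with urlopen(status_url, timeout=timeout) as response:
        body = response.read().decode("utf-8")
    return is_expected_version(body, expected)
```

In a pipeline, a step could call `smoke_test("https://test.example.org/status", git_hash)` (a placeholder URL) and stop before the longer test suites start if it returns `False`.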

Having the software successfully deployed to the test server, we then continue testing. After covering the base of the test pyramid in the build step, we now take care of the top of it. Here we execute more acceptance and functional tests, some of them in Selenium. Furthermore, we can run the first integration tests with other teams, other services and maybe third-party software. For integration testing, we do not rely on Selenium alone. We have a wide set of so-called CDC tests.

To explain this, let me tell you about one fundamental requirement to release automatically: that is the consistent use of

If the build passes this last test, it is good to be deployed to the live platform. To ensure that our platform is always stable enough for a deployment, we constantly monitor the servers and databases. This is (and needs to be) a shared team responsibility – just like any other step of the entire process. We achieved this by simply putting up a couple of monitors that are in the line of sight of every team member. Every day, we discuss the error rates and possible performance problems in front of the big screens. This general discussion and the get-togethers around the common screens enhanced our culture of constant monitoring. With more and more services being built, we are now investigating ways to focus on the most important metrics. As the issues on our live servers are different every day, we cannot determine in advance which metric “is key” for which service. Hence, we have to automatically analyse all our metrics and present only the most relevant ones to the team. The most relevant ones are usually the weirdest. Thus, our investigations are currently heading in the direction of anomaly detection.
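To make "present only the weirdest metrics" a bit more concrete, here is a minimal sketch in Python. It assumes each metric is a plain list of recent values and ranks metrics by how many standard deviations the latest value lies from its own history (a simple z-score). This is just one basic technique picked for illustration; the text does not say which anomaly detection approach was investigated, and all names are hypothetical.

```python
from statistics import mean, pstdev


def anomaly_score(history: list[float], latest: float) -> float:
    """Distance of the latest value from the historical mean, in standard deviations."""
    spread = pstdev(history)
    if spread == 0:
        # Flat history: any change at all is notable, no change is not.
        return 0.0 if latest == history[0] else float("inf")
    return abs(latest - mean(history)) / spread


def weirdest_metrics(metrics: dict[str, list[float]], top: int = 3) -> list[str]:
    """Return the names of the metrics whose latest value deviates the most."""
    scores = {
        name: anomaly_score(values[:-1], values[-1])
        for name, values in metrics.items()
        if len(values) >= 3  # need at least two historical points plus the latest
    }
    return sorted(scores, key=scores.get, reverse=True)[:top]
```

A dashboard could then show only the output of `weirdest_metrics(...)` on the big screens instead of every graph for every service.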

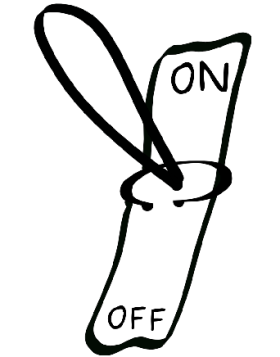

… to keep the impact on any other system but the deployed one as small as possible. Having only loosely coupled services removes the need to announce every deployment to all other (~dozen) teams. If other teams were affected by our changes and/or deployments, we would have a fundamental flaw in our architecture (or in our CDC tests). Developing and enforcing hardware or software locks at the end of the release process in order to limit deployments is not a solution for this basic architecture challenge. Hence, there is no need to announce deployments to the entire IT department. It is probably a good thing, though, to let the ops people know about our deployments in general. And one should also have a single gate that can be closed for all deployments if something is preventing deployments in general at a particular moment.
Finally, the last thing we need is deployment reporting for documentation purposes. This usually only includes which git hash/build version went live at what time, together with a changelog.
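Both ideas, the single gate and the deployment report, need very little machinery. The sketch below, in Python, shows one possible shape under stated assumptions: `DeploymentGate` and `DeploymentRecord` are hypothetical names, the gate is kept in memory only, and a real pipeline would persist both somewhere shared.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


class DeploymentGate:
    """One shared switch that can block all deployments at once."""

    def __init__(self) -> None:
        self._closed_reason: Optional[str] = None

    def close(self, reason: str) -> None:
        self._closed_reason = reason

    def open(self) -> None:
        self._closed_reason = None

    @property
    def is_open(self) -> bool:
        return self._closed_reason is None


@dataclass(frozen=True)
class DeploymentRecord:
    """What went live, when, and what changed."""

    service: str
    git_hash: str
    deployed_at: datetime
    changelog: tuple[str, ...] = ()

    def summary(self) -> str:
        lines = [f"{self.deployed_at.isoformat()} {self.service} {self.git_hash}"]
        lines += [f"  - {entry}" for entry in self.changelog]
        return "\n".join(lines)


def deploy(gate: DeploymentGate, service: str, git_hash: str,
           changelog: tuple[str, ...] = ()) -> Optional[DeploymentRecord]:
    """Return a record if the gate is open, otherwise None (nothing is deployed)."""
    if not gate.is_open:
        return None
    # The actual rollout would happen here; this sketch only records it.
    return DeploymentRecord(service, git_hash, datetime.now(timezone.utc), changelog)
```

The gate is deliberately global rather than per team: it is meant for the rare moment when nobody should deploy, not as a lock that limits everyday releases.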

As described above: the deployment to the live servers became an absolute non-event, and thus there is nothing noteworthy about this step for this blog entry. After the deployment is finished, we run a small test suite to make sure that our code was successfully released and our core functionality is still in place.